Photo credits: Claudine Gossett

On the Theory of Learning Invariants

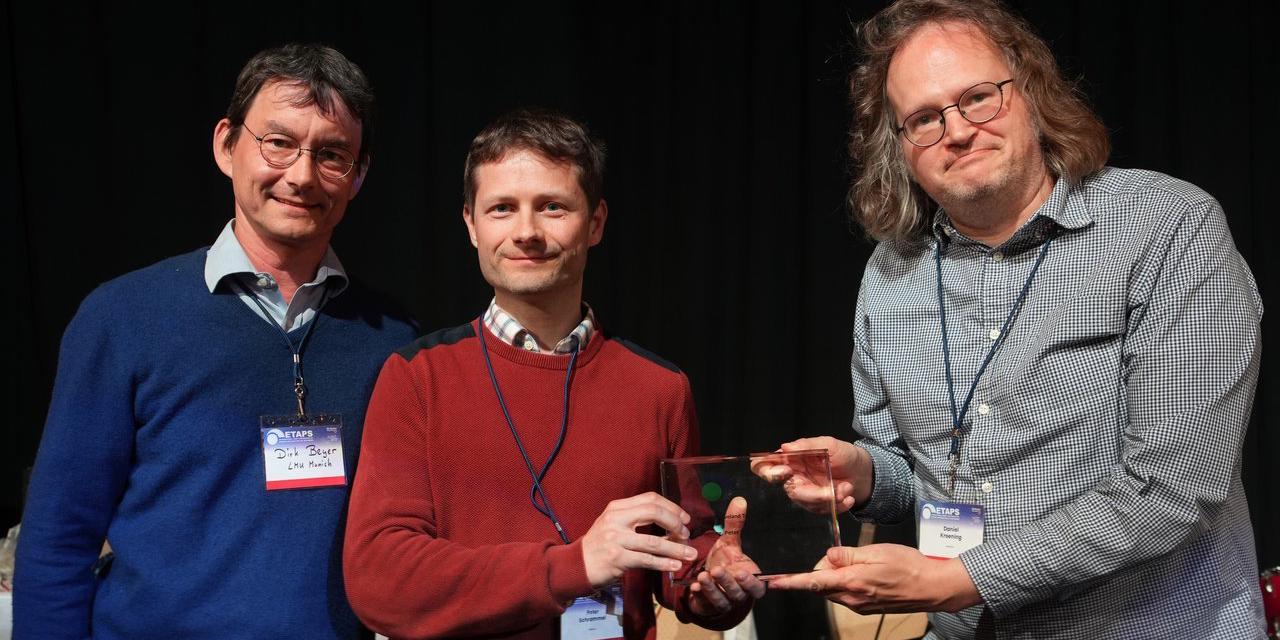

by Martin Tappler — 3 June 2025

Topics: Interview

Could you briefly introduce yourself and your research area?

Hi! I’m Yotam Feldman, currently a Schmidt Science Postdoctoral Fellow at Tel Aviv University, Israel. I was extremely fortunate to do my PhD at Tel Aviv University with two incredible mentors, Prof. Sharon Shoham and Prof. Mooly Sagiv. My PhD work focused on algorithmic formal verification, especially from a theoretical perspective. I’ve also done proof arguments for highly concurrent data structures. I’m immensely grateful to the committee and the ETAPS association for recognizing my dissertation.

Your work focuses on invariant inference. Could you explain what invariant inference is, what these algorithms are used for, and how they relate to machine learning?

Invariant inference is a method of proving the safety of transition systems by automatically finding an inductive invariant (or loop invariant): a property that is guaranteed to hold after a step of the program if it starts from a state that satisfies the same property. It’s useful because instead of considering long or infinite executions, you need to consider just a single step of the program—if you’ve managed to find such an invariant. SAT-based invariant inference algorithms find inductive invariants automatically, by translating the program and its specification to formulas in logic and then performing symbolic reasoning with the help of tools like SAT/SMT solvers. It’s amazing to see how widely applicable invariant inference algorithms have become—from hardware model checking to C/C++ code to distributed systems.

In my PhD we were trying to lay theoretical foundations that could explain the design principles underlying SAT-based invariant inference algorithms such as McMillan’s Interpolation-based invariant inference and Bradley’s IC3/PDR. These algorithms had completely upended the field, but the reasons for their success were not well understood from a theoretical standpoint. To our surprise, it turns out machine learning theory has a lot to say about invariant inference—not just the high-level inspiration of invariant inference as a (seemingly very different) learning problem, but also the deep ideas and even the technical details too. For instance, we found that Interpolation-based inference can be seen as a reincarnation of learning algorithms, where instead of the classical equivalence and membership queries put forth by Angluin in 1987, the invariant inference algorithm uses bounded model checking to the same—or similar—effect. The connection allows us to prove complexity bounds on Interpolation-based inference, cases where we know that the algorithm would succeed in finding a complex invariant in a polynomial number of SAT calls.

The learning perspective can also teach us about the power of the expressive SAT queries, proving exponential separation between theoretical models for invariant inference that are inspired by models of learning with queries; and about generalization in IC3, where learning theory provides the technical machinery to understand what’s going on.

What was the biggest challenge you encountered during your PhD, and how did you overcome it?

Probably not the biggest, but one that’s on my mind nowadays—I had great collaborations and learned a lot from earlier projects too, but it took me a few years to arrive at the topic that eventually became my PhD dissertation. This is when I finally felt that the research became a bit more streamlined, and I had more confidence. It was a miracle (enabled by perseverance), and it could easily not have happened—and it would have been fine. My dissertation probably would not have been recognized, but I would otherwise have been the same researcher.

Was there a particular paper that significantly influenced your research?

I’d like to mention two that really changed my perspective at critical times. The first is ICE: A Robust Framework for Learning Invariants by Garg, Löding, Madhusudan & Neider from CAV'14. I was trying to find a “VC dimension”—a notion for learning theory—for invariants, an intrinsic quantity that would predict how many SAT queries are required for the solver to find an invariant, and failing miserably at that. It then dawned on me that my failure had a very good reason, that the VC dimension is appropriate for passive learning from examples, whereas invariant inference algorithms actively choose the query to the solver. This is an observation I had first come across in the CAV'14 paper, and what led me to look into Angluin’s learning with queries, which inspired our query-based learning models for invariant inference and separation results on them, showing the power of expressive SAT queries.

The second paper is in learning theory, Exact Learning Boolean Functions via the Monotone Theory by Nader H. Bshouty in Information & Computation 1995, one of the most celebrated results in the theory of learning with queries. At some point I was thinking about IC3/PDR, and had arrived at an interesting operator on formulas that I thought was related to how IC3 generalizes from counterexamples. The definition was somewhat complicated, so I was trying to investigate some simple properties of the operator. I suddenly got a feeling that the list of properties was very familiar… I did not see that coming, but the operator turned out to be equivalent to the main technical workhorse in Bshouty’s monotone theory. This was the key to showing connections between IC3/PDR and abstract interpretation in a new domain that can be defined using this operator from the monotone theory.

You are now working on computational chemistry. What is your current research topic, and what made you switch?

I’m working with Prof. Barak Hirshberg at the School of Chemistry, Tel Aviv University, Israel, on path integral molecular dynamics simulations—a cool technique to compute properties of quantum systems by classical simulations. We managed to reduce the complexity of treating bosonic exchange effects (you see, I’ve learned to use some of the words!) from O(N³) to O(N²), enabling simulations of more than 1000 particles in this way. That was a big deal for us.

I wanted to learn something new and different and be forced to interact with other sciences. I now feel stupid all the time, as opposed to my PhD, when I felt stupid most of the time. It’s very liberating, often exhausting.

Do you see connections between your work in verification and in computational chemistry?

Definitely. One work I was lucky to have had the opportunity to contribute to is led by Chaviva Sirote-Katz and Prof. Yair Shokef. With Prof. Guy Cohen, we used a SAT solver to help answer questions about what defects are possible in certain combinatorial metamaterials. These are synthetic materials formed by placing building blocks with potentially different orientations in a lattice. The SAT solver helped in proving that certain sets of defects—locations of internal inconsistency in the response of the material to applied stress—cannot be formed by any of the exponential number of metamaterials. Being able to solve problems I had never imagined with the tools I knew is very rewarding. I have big plans for using tools from formal methods in electronic structure calculations—I do hope they will work!